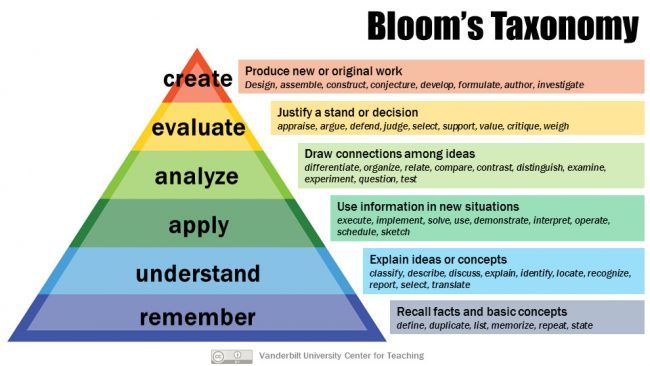

Here’s a set of insights on remote assessment in higher education, from another COVID-19 year filled with remote teaching, hybrid online / in person classes and online proctoring. I’m focusing on remote assessment – exams students can take from home. My suggestions: first, assess students through tasks that engage them in theory and skills application, analysis of evidence, and creation of knowledge; second, give students sufficient time to complete these tasks. Ask them to apply, analyze, evaluate, and create. These are activities from the higher ranks of Bloom’s taxonomy of teaching, learning and assessment.

The insights come from two classes I taught in the late Fall of 2020 and 2021 (November – December), one in the two-year research master Societal Resilience (~15 students) and one in the master program Sociology (~50 students). I’ve learned a lot about assessment from teaching these classes.

In the design of courses in higher education, assessment is the third element of constructive alignment. Assessment forms a triangle with course objectives and learning activities, and all three should be aligned. The pandemic produced an opportunity to transform the assessment in the exams, and take it to a higher level.

From a written exam on campus to a digital exam at home

Before the pandemic, the courses assessed student performance through written exams on campus. These exams asked students to reproduce some of the theories discussed in the course. Students were not allowed to take textbooks and readings with them to the exam. Such questions were easy to grade, and the same knowledge could be assessed with multiple choice questions.

Because campus rooms could not be used for exams due to COVID-19 restrictions, students had to take the exams from home. Because there was no easy way to prevent students from using the readings and other materials to improve their performance on this type of question, I allowed them to use these materials. Also, after graduation there will be few situations in which students cannot consult sources and have to know things by heart. In their future jobs, being able to apply and analyze ideas and evaluate evidence is more important for graduates than simply remembering facts and figures. Assessing students on these higher order skills is a better practice in higher education.

Exam questions engaging students in higher learning activities

In both courses, assessment focused on the application of theories to new cases and data. The exam questions test students’ performance in understanding ideas and concepts, applying them to new materials, analyzing connections between ideas and materials, and evaluating arguments based on theories and results. The questions do not test recall of facts and concepts. To answer the questions, students are allowed to consult the readings, slides, and other materials available on the web. The questions presuppose that students understand the theories and hypotheses discussed in the readings and in course meetings.

The prototypical question starts with a piece of new material: a quote, a cartoon, a news item, a table or a figure from an article or a piece of output not discussed in class. Students interpret the new material and explain it from theories and concepts covered in readings and class meetings.

Here’s an example of this type of question.

Income and wealth inequality are not the same. Explain the difference between the two concepts. Describe the degree of income and wealth inequality in the Netherlands, using statistics from the World Inequality Database https://wid.world/country/netherlands/

Questions of a second type work in the reverse order: they first ask students to draw connections between theories and hypotheses, and then invite them to present examples from new materials they collect themselves.

Here’s an example of this type of question.

a. State two hypotheses about the difference in the level of civic voluntarism between countries with a relatively high and a relatively low proportion of university graduates. Base one hypothesis on the absolute education model, and the other on the relative education model.

b. Compare the level of civic voluntarism for three countries. Which hypothesis that you stated in question a is supported by the differences in civic voluntarism between the three countries?

Questions of a third type ask students to apply, analyze, and evaluate existing theoretical explanations, or produce a new explanation. These questions presuppose that students understand the logic of theory building and are able to find flaws in explanations and repair them.

Here’s an example of this type of question.

Select two perspectives on resilience that we have discussed in the course, and contrast them in the case of the Dutch approach to COVID-19. What kind of research questions would be asked in the two perspectives? Which aspects emerge as important from the two perspectives? Are they complementary or mutually exclusive? Explain which perspective is most fruitful in your view.

Investment required

Creating and grading exams with the types of questions above takes time – certainly more time than multiple choice exams. For each exam, I spent about one 8-hour work day on creating it, and about 20 minutes per candidate on grading. Taken together, that is a lot of time, and you may not be able or willing to invest that much. It will not be feasible for courses with larger numbers of students, because it’s hard to automate the grading of questions that require higher order learning. On the other hand, I’ve found that these exams are more fun to create, and that they’re also more fun to grade. Students found the questions more challenging, but also more interesting.
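To put the time investment described above in numbers, here is a rough sketch in Python. The 8 hours of creation time and the 20 minutes of grading per candidate come from my own experience as reported above; the class sizes of 15 and 50 are the approximate sizes of the two courses, and the class of 300 is a hypothetical larger course added for illustration.

```python
CREATION_HOURS = 8         # about one 8-hour work day to create an exam
GRADING_MINUTES_EACH = 20  # about 20 minutes of grading per candidate


def exam_hours(n_students: int) -> float:
    """Total hours for creating one exam and grading all candidates."""
    return CREATION_HOURS + n_students * GRADING_MINUTES_EACH / 60


if __name__ == "__main__":
    # 15 and 50 are the approximate class sizes; 300 is hypothetical.
    for n in (15, 50, 300):
        print(f"{n:>3} students: {exam_hours(n):.1f} hours")
```

Under these assumptions, the small class costs about 13 hours per exam and the larger one just under 25 hours. Because grading time grows linearly with the number of candidates, a course with several hundred students would quickly need more than a hundred hours.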

Aligning assessment with learning objectives

These types of exam questions do not test the ability of students to memorize and reproduce concepts and theories. Instead, they test students’ understanding, and their ability to apply and analyze ideas, to evaluate ideas and evidence, and create new predictions. The higher order exam questions were aligned with the objectives of both classes.

Foundations of Societal Resilience is a pure theory class, in which students encounter different disciplinary perspectives on resilience. The course manual is here: https://renebekkers.files.wordpress.com/2022/02/2021-course-manual-foundations-of-societal-resilience.pdf. Students read a lot in the course, learn concepts, distinguish disciplinary approaches, and reason from theories. The course does not have an empirical component. I’ve posted the exam for this course here: https://renebekkers.files.wordpress.com/2022/02/2021-fsr-exam-assignments.pdf.

Inequality and Conflict in Societal Participation is a combined theory and data analysis class, in which students learn about societal value change over time, and learn to analyze survey data from the World Values Survey. The course manual is here: https://renebekkers.files.wordpress.com/2022/02/course-manual-social-inequality-2021-2022.pdf. Students read quite a bit, and learn social science theories. In addition, students write a short paper about an empirical data analysis. I’ve posted the exam for this course here: https://renebekkers.files.wordpress.com/2022/02/2021-icsp-exam-assignments.pdf.

Giving students enough time to answer the questions

The challenging questions created dissatisfaction among students under time pressure. To make it infeasible for students to collaborate with each other while not under surveillance, I created time pressure by allowing a limited amount of time for each question. The time available was scarcely enough to answer all questions, so there was no opportunity to meaningfully exchange ideas or insights without losing writing time. I’ve tried this twice, with very clear results: don’t do this. Students dislike it, and you end up testing a set of skills that is very different from the skills in the learning objectives of the course. The ability to quickly read abstracts and to assess and classify ideas embedded in new texts in English is related to working memory and processing speed, and gives native speakers of English an advantage. Also, students who are better able to handle stress, keep calm, and set priorities do better under time pressure, while improving these abilities is not a learning objective of the course. Under time pressure, students cannot demonstrate what they have learned.

In course evaluation surveys, students suggested giving an entire day to complete the exam. This would allow students to give more detailed and thought-through answers that better demonstrate their ability to engage in higher learning. On the other hand, it would create more opportunities to share ideas and texts before submitting them, not only with each other, but also with other helpers. It would also make the exam more similar to one of the other assignments in the course, in which students wrote an essay about a topic of their own choice. Therefore, in the resit of the exams I reduced the number of questions, so students had more time to answer each question. The time limit of a regular exam (2 hours and 15 minutes) served to reduce the opportunity for collaboration.

Context and technicalities

These insights come from teaching courses at the master’s level. I understand the argument that bachelor (BA) students should learn elementary concepts and facts first before they can apply theories, analyze cases and create new insights. For master (MA) level students the higher level tasks are certainly more appropriate. Still, these tasks are more challenging and more fun to complete for BA and MA students alike.

At VU Amsterdam we have a digital platform for exams (TestVision). Creating exams and test questions in it is a bit tedious, but once you know your way around the system, it works quite efficiently. The platform is not connected with the electronic learning environment we use at VU (Canvas). The university contracted online proctoring services (Proctorio) to facilitate students taking exams off-campus.

I first tried exams through the online learning environment because of two advantages: students know their way around it because they use it on a daily basis, and the platform allows for a plagiarism check on the materials students submit. The disadvantage of the online learning environment is that it cannot handle the online proctoring service that the university uses (Proctorio).

To help students prepare for the exam, I provided the questions of last year’s exam, which had a similar design. In a meeting with students before one of the exams I explained the form of the exam, and asked them for suggestions to prevent plagiarism and collaboration. The student group appreciated being consulted, and suggested a code of honor. All students completed it. Indeed, the plagiarism check found no actual plagiarism in anyone’s answers. It did produce a large number of false positives: students who had copied the exam questions into the materials they submitted were flagged.

On Canvas, it is a good idea to set the grading policy to ‘manual’, so that students do not get updates for each question you grade. Such updates stress them out and can generate a flurry of emails before you’re even done grading.

Strategies I’ve used to let students work creatively with different versions of the same question

1. Let students use a random number generator to select a single case from a set of cases.

Example: in a question about political parties, students described characteristics of the winning political party in one out of four different recent elections.

Another example: based on data from the World Values Survey and the European Values Survey, I created a table with scores for 129 different countries in three groups of 43 countries each. The random number generator determined which three countries the students compared.

2. Ask students to discuss their own context in the answer to a question.

Example: in a question about political socialization, students described the difference between the level of education of their parents and their own level of education.

Another example: on the website of Statistics Netherlands, students looked up statistics of the neighborhood in which they are currently living, and compared them with another (fixed) neighborhood.
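The random draw in the first strategy can be sketched in a few lines of Python. This is a minimal illustration, not the procedure students actually followed: I assume here that each student draws one country from each of the three groups, the country names are hypothetical placeholders, and seeding the generator with a student identifier (so that the draw can be reproduced when grading) is an addition of this sketch.

```python
import random

# Hypothetical placeholder names: three groups of 43 countries each,
# 129 countries in total. The real table used actual country names.
GROUPS = [
    [f"Group {label} country {i}" for i in range(1, 44)]
    for label in ("A", "B", "C")
]


def draw_countries(student_id: str, groups=GROUPS) -> list[str]:
    """Pick one country from each group, reproducibly per student."""
    # Assumption: seeding with the student ID makes the draw repeatable,
    # so the grader can check which countries a student should compare.
    rng = random.Random(student_id)
    return [rng.choice(group) for group in groups]


if __name__ == "__main__":
    print(draw_countries("student-001"))
```

Because each student gets a different combination of countries, answers cannot simply be copied, while the question itself stays the same for everyone.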